This text is my personal opinion, developed by researching publicly available sources such as research publications and rumors. I did not and do not work in any of the companies whose current or future products this text speculates about.

Intended audience: people with engineering experience or some basic ML knowledge who are interested in language modeling techniques that may have been selected for implementation by “GPT-4” authors from OpenAI. We need such speculation, because the authors have elected to keep the technical detail private, citing safety concerns and competitive landscape. The bulk of this text had been written in the first days of March, when actual capabilities of GPT-4 remained an enigma.

TL;DR of hypotheses developed in this post (note that some references postdate the GPT-4 pretraining run; but they either have precedents in literature, or are assumed to be known privately in advance of publication, as has been the case with chinchilla scaling law, for example)):

- (High confidence) Intra-tensor sparsity from Google’s Scaling Transformer and Terraformer. This alone provides an OOM inference performance improvement, allowing the system to break through the memory bandwidth wall.

- (High confidence) MoE with routing, similar or more advanced than in Google’s switch Transformer 2 (i.e. coarse-grained FFN layer sparsity). This improves inference performance in synergy with the former technique.

- (High confidence) Some variant of efficient attention; could be just an LSH attention from Terraformer or a more conservative parameter-efficient convolutional attention from Scaling Transformer from the same paper. In the base case it just improves inference performance in synergy with the former techniques, but in the more interesting case it might also improve asymptotic performance - paving the way to 8k…32k context window size at a bearable cost.

- (High confidence) UL2 pretraining objective for improved scaling law (note how we haven’t seen the UL2-Chinchilla just yet).

- (Confirmed) “Image-enabled multimodality” similar to Aleph’s lumionous, but with heavy prioritizing of plaintext over image modality, with the vast majority of pretraining compute spent on processing text tokens - as neccessiated by lackluster multimodal scaling laws. The pretraining could even be text-only, with vision bolted on later during finetuning.

- (High confidence) A new dataset with on the order of 10 trillion tokens, with significant part of it consisting of licensed material (could be youtube transcripts computed with help of Whisper and a large amount of books).

- (High confidence) muTransfer for efficient hyperparameter estimation.

- (Moderate confidence) Advanced positional encoding for less attention noise and runtime scaling beyond the context window size used for pretraining. Notably, also applied in Microsoft’s KOSMOS-1.

- (Moderate confidence) Encoder-Decoder architecture, for better scaling and UL2 synergy. This could explain the special nature of “system” prompt.

- (Moderate confidence) Retrieval-augmented language modeling for enhanced scaling (see Meta’s ATLAS).

- (Low confidence) Some nontrivial form of dataset pruning and/or reordering to maximize pretraining sample efficiency and enhance the resulting scaling law.

This list doesn’t include engineering-heavy post-training enhancements; I simply expect world-class ML engineering with all the best model compression and inference optimization techniques stacked together.

Below is my interpretation of the recent history and incentives in this burgeoning field, and how I came to these guesses.

Introduction

- As of 2023, Language Models (LMs) have claimed an ever-growing amount of attention across wide swathes of society: groups as different as enthusiastic hackers, public intellectuals, corporate strategy execs and VC investors all have some stake in the future of LMs.

- The current trajectory of LM progress depends on four pillars:

- Scaling: few entities investing significant resources (examples: 1 2 3) to make training runs of novel large models possible. Notably, these few entities serve as gatekeepers, deciding which model architectures, on which datasets and for which applications are trained at all. The basis for these decisions is multi-factored, with risk considerations (technical, reputational) being a significant factor. We will pay particular attention to technical risks of including some techniques in the training run and, on the other hand, to de-risking - that is, large-scale validation of techniques either originating in research publications or privately known.

- Algorithmic progress: research on improving compute-, parameter efficiency and expanding the applicability of deep learning to novel modalities, applications and abilities. Notably, this is a minor subset of DL research, seeing as much of it doesn’t prove worthy of scaling (see below) - which in turn is a subset of ML research. Some approaches to measure algorithmic progress study combinations of enhancements for some fixed problem or model architecture: AI and Efficiency (OpenAI), Deep Neural Nets: 33 years ago and 33 years from now (Karpathy’s blog), EfficientQA challenge (Google), MosaicML Composer documentation.

- Engineering: broadly understood as the development of systems making the first two pillars possible at scale, such as deep learning frameworks DLF1 DLF2, parallelism schemes DLPS2 and associated engines and compilers.

- Hardware: arguably could be merged with engineering, but it is sufficiently insulated to be its own field and professional community, and in a certain long-term sense it guides the evolution of the former three pillars - some academics have coined the uncharitable term “hardware lottery” to describe this path-dependent dynamic.

- And what looks poised to become a fifth pillar: impending regulation.

Just a few years ago this structure was not yet in place, likely owing to “inertia” associated with fundamental technical and economical risks of investing significant (by the standards of usual software engineering or academic ML) resources in this unproven field.

This inertia had been broken by a few companies and associated private research organizations. Arguably, the most important events that changed the outlook for this nascent industry were unprecedented research milestones, demonstrating reliable improvement of model performance with scaling data, parameters and compute. For the first time it was shown that training ever larger language models could lead to ever more impressive tools with emergent functionality responsive to natural language input provided at runtime. It was also demonstrated that large enough language models trained on general datasets can be quickly finetuned to become competent at specific tasks. After those original publications and a tech demo, an exponential stream of novel milestones (DALL-E, Jukebox, GLIDE, Chinchilla, Flamingo, etc) continues to de-risk entire new subfields, making them attractive avenues for investment by more cautious competitors. Something could be said here about comparative novelty and priority of Google/Deepmind’s and OpenAI’s research and perhaps the unfair amount of attention and industry leadership OpenAI gets with its publications.

So far, most of scaling DL research, especially in language modeling, has been done with dense Transformer variants - and while we have seen the algorithmic progress in objective function (UL2, FCM), optimizers (Lion) and some small tweaks of the base architecture, it mostly remained in place, at least in experiments that were tried at scale. Some smaller-scale work is more interesting (H3, Hyena, Kosmos). Obviously, not every promising and technically interesting technique proves its potential at scale, or even should be scaled.

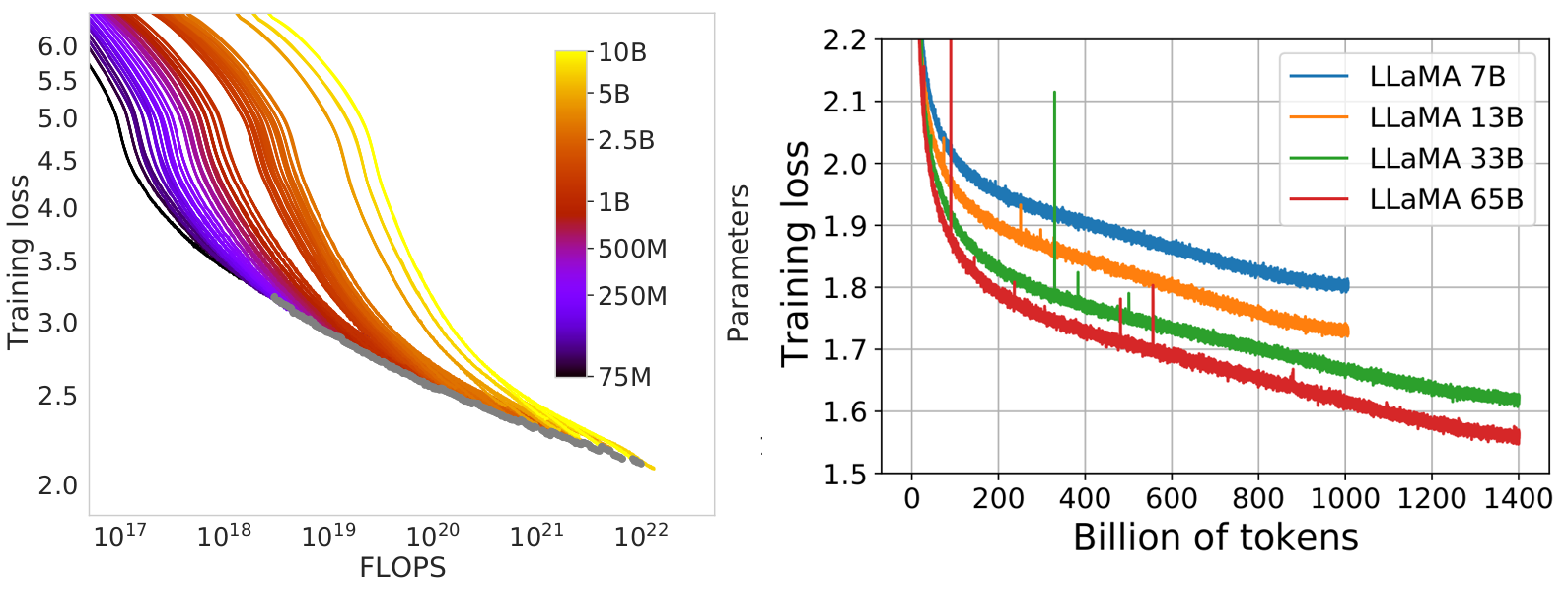

The takeaway is that, very likely, the “GPT-4” model is – in sharp contrast to its predecessors – implemented on top of deep modifications of the base Transformer (Vaswani 2017) architecture, setting up new standard of parameter- and inference compute efficiency and making Deepmind’s Chinchilla and Meta’s LLaMA obsolete, as they have made obsolete the preceding scaling laws by Kaplan et al. I offer an educated guess on specific technical methods by which this was achieved.

---{ Under construction }---

What we know

Incentive structure for training a large generative model

If you are a private research lab (Deepmind, Google Brain, Meta Research) owned by an incumbent corporation, you optimize for some mix of the following:

- Comparability with baselines (notably, LLaMA authors wrote that they applied basic transformer architecture in lieu of more efficient alternatives to keep their project comparable to Deepmind’s Chinchilla).

- Accuracy on academic benchmarks.

- Scientific novelty.

- Accuracy on parent company’s problems (with or without finetuning).

If you are a for-profit product-oriented organization like OpenAI, your incentives are different in important ways:

- You don’t care much about comparability with baselines – you are free to stack powerful enhancements to see where this takes you.

- You care less about academic benchmarks (although it’s good for PR to beat them).

- You care a lot about delivering a successful product – a model useful for 0-shot execution of customer’s tasks that can also be finetuned by client startups to provide novel profitable products to end-users.

- You can and do optimize your foundational model architecture for inference efficiency and compatibility with advanced engineering optimizations, as you care a lot about inference economy.

Deep learning trick stacking: most tricks don’t scale

A large part of published DL papers deals with some methods of enhancing the model’s performance. The most important takeways here are:

- Only a small ratio of published “tricks” end up contributing to enhanced language model performance at scale.

- This necessitates costly experiments to de-risk promising enhancements (hopefully done by your competitor, i.e. Google, and published in the open), or schemes to study these techniques in smaller models, or even falling back to subjective selection criteria.